Python API Reference#

- stbt.android.adb(args, **subprocess_kwargs) CompletedProcess#

Send commands to an Android device using ADB.

This is a convenience function. It will construct an AdbDevice with the default parameters (taken from your config files) and call AdbDevice.adb with the parameters given here.

- class stbt.android.AdbDevice(address=None, adb_server=None, adb_binary=None, tcpip=None)#

Send commands to an Android device using ADB.

Default values for each parameter can be specified in your “stbt.conf” config file under the “[android]” section.

- Parameters:

address (string) – IP address (if using Network ADB) or serial number (if connected via USB) of the Android device. You can get the serial number by running “adb devices -l”. If not specified, this is read from “device_under_test.ip_address” in your Node-specific configuration files.

adb_server (string) – The ADB server (that is, the PC connected to the Android device). Defaults to localhost.

adb_binary (string) – The path to the ADB client executable. Defaults to “adb”.

tcpip (bool) – The ADB server communicates with the Android device via TCP/IP, not USB. This requires that you have enabled Network ADB access on the device. Defaults to True if address is an IP address, False otherwise.

Note

Currently, the Stb-tester Nodes don’t support ADB over USB. You must enable Network ADB on the device-under-test, and you must configure the IP address of the device-under-test by specifying device_under_test.ip_address in the Node-specific configuration files for each Stb-tester Node.

- adb(args, *, timeout=None, **subprocess_kwargs)#

Run any ADB command.

For example, the following code will use “adb shell am start” to launch an app on the device:

    d = AdbDevice(...)
    d.adb(["shell", "am", "start", "-S", "com.example.myapp/com.example.myapp.MainActivity"])

Any keyword arguments are passed on to subprocess.run.

- Returns:

A subprocess.CompletedProcess, as returned by subprocess.run.

- Raises:

subprocess.CalledProcessError if check is true and the adb process returns a non-zero exit status.

- Raises:

subprocess.TimeoutExpired if timeout is specified and the adb process doesn’t finish within that number of seconds.

- Raises:

stbt.android.AdbError if “adb connect” fails.
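The keyword-argument forwarding described above can be illustrated without an Android device. The following is a minimal sketch, not Stb-tester's implementation: `run_stand_in` is a hypothetical helper that runs the Python interpreter instead of adb, so that the effect of `check` and `timeout` (both standard subprocess.run arguments) can be seen in isolation.

```python
import subprocess
import sys


def run_stand_in(args, *, timeout=None, **subprocess_kwargs):
    """Hypothetical stand-in for AdbDevice.adb.

    Runs `python -c <args...>` instead of `adb <args...>`, and forwards
    `timeout` plus any other keyword arguments to subprocess.run.
    """
    cmd = [sys.executable, "-c"] + list(args)
    return subprocess.run(cmd, timeout=timeout, **subprocess_kwargs)


# capture_output and text are ordinary subprocess.run keyword arguments.
result = run_stand_in(["print('hello')"], capture_output=True, text=True)
print(result.returncode, result.stdout.strip())

# check=True turns a non-zero exit status into CalledProcessError.
try:
    run_stand_in(["import sys; sys.exit(3)"], check=True)
    failed_status = None
except subprocess.CalledProcessError as e:
    failed_status = e.returncode

# timeout raises TimeoutExpired if the process runs for too long.
try:
    run_stand_in(["import time; time.sleep(5)"], timeout=0.5)
    timed_out = False
except subprocess.TimeoutExpired:
    timed_out = True
```

With a real device the same keyword arguments would be given to AdbDevice.adb, and a failed connection would raise stbt.android.AdbError instead.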

- get_frame(coordinate_system=None) Frame#

Take a screenshot using ADB.

If you are capturing video from the Android device via another method (namely, HDMI capture) sometimes it can be useful to capture a frame via ADB for debugging. This function will manipulate the ADB screenshot (scale and/or rotate it) to match the screenshots from your main video-capture method as closely as possible.

- Returns:

A stbt.Frame, that is, an image in OpenCV format. Note that the time attribute won’t be very accurate (probably to <0.5s or so).

- press(key) None#

Send a keypress.

- Parameters:

key (str) – An Android keycode as listed in <https://developer.android.com/reference/android/view/KeyEvent.html>. Also accepts standard Stb-tester key names like “KEY_HOME” and “KEY_BACK”.

- swipe(start_position, end_position) None#

Swipe from one point to another point.

- Parameters:

start_position – A stbt.Region or (x, y) tuple of coordinates at which to start.

end_position – A stbt.Region or (x, y) tuple of coordinates at which to stop.

Example:

d.swipe((100, 100), (100, 400))

- tap(position) None#

Tap on a particular location.

- Parameters:

position – A stbt.Region, or an (x, y) tuple.

Example:

    d.tap((100, 20))
    d.tap(stbt.match(...).region)

- logcat(filename='logcat.log', logcat_args=None)#

Run “adb logcat” and stream the logs to filename.

This is a context manager. See Capturing logs from the device-under-test for the recommended way to use it.

- Parameters:

filename (str) – Where the logs are written.

logcat_args (list) – Optional arguments to pass on to “adb logcat”, such as filter expressions. For example: logcat_args=["ActivityManager:I", "MyApp:D", "*:S"]. See the logcat documentation.

- exception stbt.android.AdbError#

Bases: Exception

Exception raised by AdbDevice.adb.

- Variables:

returncode (int) – Exit status of the adb command.

cmd (list) – The command that failed, as given to AdbDevice.adb.

output (str) – The output from adb.

devices (str) – The output from “adb devices -l” (useful for debugging connection errors).

- class stbt.AppleTV(address: str | None = None)#

Control an AppleTV device using pyatv.

pyatv is an open source tool for controlling Apple TV devices. It uses AirPlay and other network protocols supported by the Apple TV. This class makes it easy to use pyatv in Stb-tester test scripts.

pyatv has additional dependencies that are not installed by default in the Stb-tester v34 environment. For instructions on how to install these dependencies, and how to configure the Apple TV’s IP address, see Controlling Apple TV with pyatv.

Warning

We have done the “integration” work to make pyatv work on the Stb-tester Node, but we are not the authors of pyatv, and we don’t provide support for bugs in pyatv itself.

- Parameters:

address (str) – The IP address of the Apple TV device. If not specified, this is read from “device_under_test.ip_address” in your Node-specific configuration files.

- launch_app(name: str)#

Launches the specified app.

- Parameters:

name – The name or bundle ID of the app (for example “Netflix” or “com.netflix.Netflix”).

- stbt.apply_ocr_corrections( ) str#

Applies the same corrections as stbt.ocr’s corrections parameter.

This is available as a separate function so that you can use it to post-process old test artifacts using new corrections.

- stbt.as_precondition(message)#

Context manager that replaces test failures with test errors.

Stb-tester’s reports show test failures (that is, UITestFailure or AssertionError exceptions) as red results, and test errors (that is, unhandled exceptions of any other type) as yellow results. Note that wait_for_match, wait_for_motion, and similar functions raise a UITestFailure when they detect a failure. By running such functions inside an as_precondition context, any UITestFailure or AssertionError exceptions they raise will be caught, and a PreconditionError will be raised instead.

When running a single testcase hundreds or thousands of times to reproduce an intermittent defect, it is helpful to mark unrelated failures as test errors (yellow) rather than test failures (red), so that you can focus on diagnosing the failures that are most likely to be the particular defect you are looking for. For more details see Test failures vs. errors.

- Parameters:

message (str) – A description of the precondition. Word this positively: “Channels tuned”, not “Failed to tune channels”.

- Raises:

PreconditionError if the wrapped code block raises a UITestFailure or AssertionError.

Example:

    def test_that_the_on_screen_id_is_shown_after_booting():
        channel = 100

        with stbt.as_precondition("Tuned to channel %s" % channel):
            mainmenu.close_any_open_menu()
            channels.goto_channel(channel)
            power.cold_reboot()
            assert channels.is_on_channel(channel)

        stbt.wait_for_match("on-screen-id.png")
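The exception translation described above can be sketched in plain Python. This is an illustration only, not Stb-tester's code: the two exception classes are local stand-ins for stbt.UITestFailure and stbt.PreconditionError, and the wording of the error message is an assumption.

```python
from contextlib import contextmanager


class UITestFailure(Exception):
    """Local stand-in for stbt.UITestFailure."""


class PreconditionError(Exception):
    """Local stand-in for stbt.PreconditionError."""


@contextmanager
def as_precondition_sketch(message):
    try:
        yield
    except (UITestFailure, AssertionError) as e:
        # Test failures inside the block are reported as test errors.
        raise PreconditionError(
            "Didn't meet precondition '%s' (%r)" % (message, e)) from e
    # Any other exception type propagates unchanged.


def outcome(exc):
    """Raise `exc` inside the context; return the exception type seen outside."""
    try:
        with as_precondition_sketch("Channels tuned"):
            raise exc
    except Exception as e:
        return type(e).__name__


print(outcome(UITestFailure("no match")))
print(outcome(AssertionError("wrong channel")))
print(outcome(ValueError("unrelated bug")))
```

Exceptions that are already test errors, such as the ValueError in the last call, are not wrapped, so they keep their original type and traceback.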

- stbt.audio_chunks(time_index=None)#

Low-level API to get raw audio samples.

audio_chunks returns an iterator of AudioChunk objects. Each one contains 100ms to 5s of mono audio samples (see AudioChunk for the data format).

audio_chunks keeps a buffer of 10s of audio samples. time_index allows the caller to access these old samples. If you read from the returned iterator too slowly you may miss some samples. The returned iterator will skip these old samples and silently re-sync you at -10s. You can detect this situation by comparing the .end_time of the previous chunk to the .time of the current one.

- Parameters:

time_index (int or float) – Time from which audio samples should be yielded. This is an epoch time compatible with time.time(). Defaults to the current time as given by time.time().

- Returns:

An iterator yielding AudioChunk objects

- Return type:

Iterator[AudioChunk]
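The gap check mentioned above (comparing the previous chunk's end_time with the current chunk's time) might look like the following sketch. `Chunk` is a hypothetical stand-in that carries only the two timestamps, and the tolerance value is an assumption rather than a documented constant.

```python
from collections import namedtuple

# Hypothetical stand-in for AudioChunk: only the timestamps matter here.
Chunk = namedtuple("Chunk", "time end_time")


def find_gaps(chunks, tolerance_secs=0.001):
    """Return (previous_end_time, current_time) for every skipped interval."""
    gaps = []
    previous = None
    for chunk in chunks:
        if previous is not None and chunk.time - previous.end_time > tolerance_secs:
            gaps.append((previous.end_time, chunk.time))
        previous = chunk
    return gaps


# The third chunk starts 3 seconds after the second one ended.
chunks = [Chunk(0.0, 1.0), Chunk(1.0, 2.0), Chunk(5.0, 6.0)]
print(find_gaps(chunks))
```

In a real test script the same loop would run over stbt.audio_chunks() instead of a prepared list.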

- class stbt.AudioChunk#

A sequence of audio samples.

An AudioChunk object is what you get from audio_chunks. It is a subclass of numpy.ndarray. An AudioChunk is a 1-D array containing audio samples in 32-bit floating point format (numpy.float32) between -1.0 and 1.0.

In addition to the members inherited from numpy.ndarray, AudioChunk defines the following attributes:

- Variables:

time (float) – The wall-clock time of the first audio sample in this chunk, as number of seconds since the unix epoch (1970-01-01T00:00:00Z). This is the same format used by the Python standard library function time.time.

rate (int) – Number of samples per second. This will typically be 48000.

duration (float) – The duration of this audio chunk in seconds.

end_time (float) – time + duration.

AudioChunk supports slicing using Python’s [x:y] syntax, so the above attributes will be updated appropriately on the returned slice.
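As a rough illustration of how the timestamps of a slice relate to those of the original chunk, the arithmetic below assumes that slicing `[start:stop]` shifts time by `start / rate` and sets duration to the number of remaining samples divided by rate. That reading follows from the attribute definitions above but is an assumption about the implementation; `slice_timestamps` is a hypothetical helper.

```python
def slice_timestamps(time, rate, start, stop):
    """Return (time, duration, end_time) expected for chunk[start:stop]."""
    new_time = time + start / rate
    duration = (stop - start) / rate
    return new_time, duration, new_time + duration


# The second half of a 1-second chunk sampled at 48 kHz.
print(slice_timestamps(time=1000.0, rate=48000, start=24000, stop=48000))
```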

- class stbt.BGRDiff( )#

Compares 2 frames by calculating the color distance between them.

The algorithm calculates the euclidean distance in BGR colorspace between each pair of corresponding pixels in the 2 frames. This distance is then binarized using the specified threshold: Values smaller than the threshold are ignored. Then, an “erode” operation removes differences that are only 1 pixel wide or high. If any differences remain, the 2 frames are considered different.

This is the default diffing algorithm for detect_motion, wait_for_motion, press_and_wait, find_selection_from_background, and ocr’s text_color.
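The three steps of the algorithm (per-pixel distance, thresholding, erosion) can be sketched in pure Python on tiny frames. This is an illustration only: the real class operates on OpenCV images, and the 3x3 erosion neighbourhood and the handling of image borders used here are assumptions.

```python
import math


def bgr_distance(p, q):
    """Euclidean distance between two (blue, green, red) pixels."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


def diff_mask(frame_a, frame_b, threshold):
    """True where the colour distance reaches the threshold."""
    return [[bgr_distance(p, q) >= threshold for p, q in zip(row_a, row_b)]
            for row_a, row_b in zip(frame_a, frame_b)]


def erode(mask):
    """Keep a pixel only if its whole 3x3 neighbourhood is set.

    Neighbours outside the image are ignored (an assumption).
    """
    h, w = len(mask), len(mask[0])
    return [[all(mask[ny][nx]
                 for ny in range(max(0, y - 1), min(h, y + 2))
                 for nx in range(max(0, x - 1), min(w, x + 2)))
             for x in range(w)]
            for y in range(h)]


def frames_differ(frame_a, frame_b, threshold):
    return any(any(row) for row in erode(diff_mask(frame_a, frame_b, threshold)))


black = [[(0, 0, 0)] * 5 for _ in range(5)]


def with_white(pixels):
    frame = [row[:] for row in black]
    for y, x in pixels:
        frame[y][x] = (255, 255, 255)
    return frame


one_pixel = with_white([(2, 2)])
block = with_white([(y, x) for y in (1, 2, 3) for x in (1, 2, 3)])

print(frames_differ(black, one_pixel, threshold=25))  # isolated pixel is eroded away
print(frames_differ(black, block, threshold=25))      # 3x3 block survives erosion
```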

- class stbt.Color(hexstring: str)#

- class stbt.Color(blue: int, green: int, red: int, /)

- class stbt.Color(blue: int, green: int, red: int, alpha: int | None = None, /)

- class stbt.Color(*, blue: int, green: int, red: int, alpha: int | None = None)

- class stbt.Color(bgr: tuple[int, int, int])

- class stbt.Color(bgra: tuple[int, int, int, int])

- class stbt.Color(color: Color, /)

A BGR color, optionally with an alpha (transparency) value.

A Color can be created from an HTML-style hex string:

    >>> Color('#f77f00')
    Color('#f77f00')

Or from Blue, Green, Red values in the range 0-255:

    >>> Color(0, 127, 247)
    Color('#f77f00')

Note: When you specify the colors in this way, the BGR order is the opposite of the HTML-style RGB order. This is for compatibility with the way OpenCV stores colors.

Any stbt APIs that take a Color will also accept a string or tuple in the above formats, so you don’t need to construct a Color explicitly.
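The relationship between the HTML-style RGB hex string and the BGR tuple order can be checked with two small helpers. These are hypothetical functions written for illustration; they are not part of the stbt API.

```python
def hex_to_bgr(hexstring):
    """Convert '#rrggbb' to a (blue, green, red) tuple."""
    s = hexstring.lstrip("#")
    red, green, blue = (int(s[i:i + 2], 16) for i in (0, 2, 4))
    return (blue, green, red)


def bgr_to_hex(blue, green, red):
    """Convert blue, green, red values (0-255) to '#rrggbb'."""
    return "#%02x%02x%02x" % (red, green, blue)


print(hex_to_bgr("#f77f00"))
print(bgr_to_hex(0, 127, 247))
```

The two calls correspond to the two doctest examples above: the hex string lists red first, while the tuple lists blue first.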

- stbt.color_diff(frame: Frame | None = None, *, background_color: Color | None = None, foreground_color: Color | None = None, threshold: float = 0.05, erode: bool = False)#

Calculate euclidean color distance in a perceptually uniform colorspace.

Calculates the distance of each pixel in frame against the color specified in background_color or foreground_color. The output is a binary (black and white) image.

- Parameters:

frame (stbt.Frame) – The video frame to process.

background_color (Color) – The color to diff against. Output pixels will be white where the color distance is greater than threshold. Use this to remove a background of a particular color.

foreground_color (Color) – The color to diff against. Output pixels will be white where the color distance is smaller than threshold. Use this to find a foreground feature of a particular color, such as text or the selection/focus.

threshold (float) – Binarization threshold in the range [0., 1.]. Foreground pixels will be set to white, background pixels to black. A value of 0.01 means a barely-noticeable difference to human perception. To disable binarization set threshold=None; the output will be a grayscale image.

erode (bool) – Run the thresholded differences through an erosion algorithm to remove noise or small differences (less than 3px).

- Return type:

- Returns:

Binary (black & white) image, or grayscale image if threshold=None.

Added in v33.

- class stbt.ConfirmMethod#

An enum. See MatchParameters for documentation of these values.

- NONE = 'none'#

- ABSDIFF = 'absdiff'#

- NORMED_ABSDIFF = 'normed-absdiff'#

- stbt.crop(frame: Frame, region: Region) Frame#

Returns an image containing the specified region of frame.

- Parameters:

frame (stbt.Frame or numpy.ndarray) – An image in OpenCV format (for example as returned by frames, get_frame and load_image, or the frame parameter of MatchResult).

- Returns:

An OpenCV image (numpy.ndarray) containing the specified region of the source frame. This is a view onto the original data, so if you want to modify the cropped image call its copy() method first.

- stbt.detect_motion(timeout_secs: float = 10, noise_threshold: int | None = None, mask: Mask | Region | str = Region.ALL, region: Region = Region.ALL, frames: Iterator[Frame] | None = None)#

Generator that yields a sequence of one MotionResult for each frame processed from the device-under-test’s video stream.

The MotionResult indicates whether any motion was detected.

Use it in a for loop like this:

    for motionresult in stbt.detect_motion():
        ...

In most cases you should use wait_for_motion instead.

- Parameters:

timeout_secs (int or float or None) – A timeout in seconds. After this timeout the iterator will be exhausted. That is, a for loop like for m in detect_motion(timeout_secs=10) will terminate after 10 seconds. If timeout_secs is None then the iterator will yield results forever. Note that you can stop iterating (for example with break) at any time.

noise_threshold (int) – The difference in pixel intensity to ignore. Valid values range from 0 (any difference is considered motion) to 255 (which would never report motion).

This defaults to 25. You can override the global default value by setting noise_threshold in the [motion] section of .stbt.conf.

mask (str|numpy.ndarray|Mask|Region) – A Region or a mask that specifies which parts of the image to analyse. This accepts anything that can be converted to a Mask using stbt.load_mask. See Regions and Masks.

region (Region) – Deprecated synonym for mask. Use mask instead.

frames (Iterator[stbt.Frame]) – An iterable of video-frames to analyse. Defaults to stbt.frames().

Changed in v33: mask accepts anything that can be converted to a Mask using load_mask. The region parameter is deprecated; pass your Region to mask instead. You can’t specify mask and region at the same time.

Changed in v34: The difference-detection algorithm takes color into account. The noise_threshold parameter changed range (from 0.0-1.0 to 0-255), sense (from “bigger is stricter” to “smaller is stricter”), and default value (from 0.84 to 25).

- stbt.detect_pages(frame=None, candidates=None, test_pack_root='')#

Find Page Objects that match the given frame.

This function tries each of the Page Objects defined in your test-pack (that is, subclasses of stbt.FrameObject) and returns an instance of each Page Object that is visible (according to the object’s is_visible property).

This is a Python generator that yields 1 Page Object at a time. If your code only consumes the first object (like in the example below), detect_pages will try each Page Object class until it finds a match, yield it to your code, and then it won’t waste time trying other Page Object classes:

    page = next(stbt.detect_pages())

To get all the matching pages you can iterate like this:

    for page in stbt.detect_pages():
        print(type(page))

Or create a list like this:

    pages = list(stbt.detect_pages())

- Parameters:

frame (stbt.Frame) – The video frame to process; if not specified, a new frame is grabbed from the device-under-test by calling stbt.get_frame.

candidates (Sequence[Type[stbt.FrameObject]]) – The Page Object classes to try. Note that this is a list of the classes themselves, not instances of those classes. If candidates isn’t specified, detect_pages will use static analysis to find all of the Page Objects defined in your test-pack.

test_pack_root (str) – A subdirectory of your test-pack to search for Page Object definitions, used when candidates isn’t specified. Defaults to the entire test-pack.

- Return type:

Iterator[stbt.FrameObject]

- Returns:

An iterator of Page Object instances that match the given frame.

Added in v32.
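The lazy behaviour of the generator (stopping at the first match when only one page is consumed) can be demonstrated with a small stand-in. Everything here is hypothetical: the `Page` classes, the set used as a "frame", and `detect_pages_sketch`, which takes its candidates explicitly instead of discovering them by static analysis.

```python
tried = []  # records which Page Object classes were instantiated


class Page:
    """Hypothetical Page Object: visible if its class name is in the frame."""

    def __init__(self, frame):
        tried.append(type(self).__name__)
        self._frame = frame

    @property
    def is_visible(self):
        return type(self).__name__ in self._frame


class Home(Page):
    pass


class Menu(Page):
    pass


class Settings(Page):
    pass


def detect_pages_sketch(frame, candidates):
    for cls in candidates:
        page = cls(frame)
        if page.is_visible:
            yield page


frame = {"Menu", "Settings"}  # stand-in for a video frame

first = next(detect_pages_sketch(frame, [Home, Menu, Settings]))
tried_for_first = list(tried)  # Settings was never tried

all_pages = [type(p).__name__
             for p in detect_pages_sketch(frame, [Home, Menu, Settings])]
print(type(first).__name__, tried_for_first, all_pages)
```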

- class stbt.Differ#

An algorithm that compares two images or frames to find the differences between them.

Subclasses of this class implement the actual diffing algorithms: See BGRDiff and GrayscaleDiff.

- class stbt.Direction#

An enumeration.

- HORIZONTAL = 'horizontal'#

Process the image from left to right

- VERTICAL = 'vertical'#

Process the image from top to bottom

- stbt.draw_text(text: str, duration_secs: float = 3) None#

Write the specified text to the output video.

- stbt.find_file(filename: str) str#

Searches for the given filename relative to the directory of the caller.

When Stb-tester runs a test, the “current working directory” is not the same as the directory of the test-pack git checkout. If you want to read a file that’s committed to git (for example a CSV file with data that your test needs) you can use this function to find it. For example:

f = open(stbt.find_file("my_data.csv"))

If the file is not found in the directory of the Python file that called find_file, this will continue searching in the directory of that function’s caller, and so on, until it finds the file. This allows you to use find_file in a helper function that takes a filename from its caller.

This is the same algorithm used by load_image.

- Parameters:

filename (str) – A relative filename.

- Return type:

str

- Returns:

Absolute filename.

- Raises:

FileNotFoundError if the file can’t be found.

Added in v33.

- stbt.find_regions_by_color(color: Color, *, frame: Frame | None = None, threshold: float = 0.05, erode: bool = False, mask: Mask | Region | str = Region.ALL, min_size: SizeT = (20, 20), max_size: SizeT | None = None, merge_px: int | SizeT = 0)#

Find contiguous regions of a particular color.

For a guide to using this API see Finding GUI elements by color.

- Parameters:

frame (stbt.Frame) – The video frame to process.

color (Color) – See the foreground_color parameter of color_diff.

threshold (float) – See color_diff.

erode (bool) – See color_diff.

mask (str|numpy.ndarray|Mask|Region) – A Region or a mask that specifies which parts of the image to analyse. This accepts anything that can be converted to a Mask using stbt.load_mask. See Regions and Masks.

min_size (Size) – Exclude regions that are smaller than this width or height.

max_size (Size) – Exclude regions that are larger than this width or height.

merge_px (int|Size) – Merge nearby regions that are separated by a gap of this many pixels or fewer. This is a tuple of (horizontal gap, vertical gap), or if a single integer is specified, that value is used for both horizontal and vertical gaps. For example, to merge letters within the same word in horizontal text, use merge_px=(5, 0).

- Return type:

- Returns:

A list of stbt.Region instances.

stbt.find_regions_by_color was added in v33.

The merge_px parameter was added in v34 in October 2025.
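One plausible reading of merge_px is sketched below: two regions are merged when the horizontal gap between them is at most the first value and the vertical gap is at most the second. This is an assumption about the semantics, not the library's algorithm, and regions are represented as hypothetical (x, y, right, bottom) tuples with exclusive right and bottom edges.

```python
def gap(a_lo, a_hi, b_lo, b_hi):
    """Distance between two 1-D intervals; 0 if they touch or overlap."""
    return max(0, b_lo - a_hi, a_lo - b_hi)


def merge_regions(regions, merge_px):
    """Repeatedly merge regions whose gaps are within merge_px."""
    max_dx, max_dy = (merge_px, merge_px) if isinstance(merge_px, int) else merge_px
    regions = list(regions)
    merged = True
    while merged:
        merged = False
        for i in range(len(regions)):
            for j in range(i + 1, len(regions)):
                a, b = regions[i], regions[j]
                if (gap(a[0], a[2], b[0], b[2]) <= max_dx
                        and gap(a[1], a[3], b[1], b[3]) <= max_dy):
                    # Replace the pair with their bounding box.
                    regions[i] = (min(a[0], b[0]), min(a[1], b[1]),
                                  max(a[2], b[2]), max(a[3], b[3]))
                    del regions[j]
                    merged = True
                    break
            if merged:
                break
    return regions


# Two letters on one line (3px apart) and one letter on a lower line.
letters = [(0, 0, 10, 10), (13, 0, 23, 10), (0, 30, 10, 40)]
print(merge_regions(letters, merge_px=(5, 0)))
```

With merge_px=(5, 0) the two letters on the first line become one region, while the letter on the lower line stays separate because the vertical gap is larger than 0.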

- stbt.find_selection_from_background(image: Image | str | Color, max_size: tuple[int, int], min_size: tuple[int, int] | None = None, frame: Frame | None = None, mask: Mask | Region | str = Region.ALL, threshold: float = 25, erode: bool = True)#

Checks whether frame matches image, calculating the region where there are any differences. The region where frame doesn’t match the image is assumed to be the selection. This allows us to simultaneously detect the presence of a screen (used to implement a stbt.FrameObject class’s is_visible property) as well as finding the selection.

For example, to find the selection of an on-screen keyboard, image would be a screenshot of the keyboard without any selection. You may need to construct this screenshot artificially in an image editor by merging two different screenshots.

Unlike stbt.match, image must be the same size as frame.

- Parameters:

image – The background to match against. It can be the filename of a PNG file on disk or an image previously loaded with stbt.load_image; or a stbt.Color to match a plain background.

If it has an alpha channel, any transparent pixels are masked out (that is, the alpha channel is ANDed with mask). This image must be the same size as frame.

max_size – The maximum size (width, height) of the differing region. If the differences between image and frame are larger than this in either dimension, the function will return a falsey result.

min_size – The minimum size (width, height) of the differing region (optional). If the differences between image and frame are smaller than this in either dimension, the function will return a falsey result.

frame – If this is specified it is used as the video frame to search in; otherwise a new frame is grabbed from the device-under-test. This is an image in OpenCV format (for example as returned by stbt.frames and stbt.get_frame).

mask – A Region or a mask that specifies which parts of the image to analyse. This accepts anything that can be converted to a Mask using stbt.load_mask. See Regions and Masks.

threshold – Threshold for differences between image and frame for it to be considered a difference. This is a colour distance between pixels in image and frame. 0 means the colours have to match exactly. 255 would mean that even white (255, 255, 255) would match black (0, 0, 0).

erode – By default we pass the thresholded differences through an erosion algorithm to remove noise or small anti-aliasing differences. If your selection is a single line less than 3 pixels wide, set this to False.

- Returns:

An object that will evaluate to true if image and frame matched with a difference smaller than max_size. The object has the following attributes:

matched (bool) – True if the image and the frame matched with a difference smaller than max_size.

region (stbt.Region) – The bounding box that contains the selection (that is, the differences between image and frame).

mask_region (stbt.Region) – The region of the frame that was analysed, as given in the function’s mask parameter.

image (stbt.Image) – The reference image given to find_selection_from_background.

frame (stbt.Frame) – The video-frame that was analysed.

stbt.find_selection_from_background was added in v32.

Changed in v33: mask accepts anything that can be converted to a Mask using load_mask (previously it only accepted a Region).

- class stbt.Frame#

A frame of video.

A Frame is what you get from stbt.get_frame and stbt.frames. It is a subclass of numpy.ndarray, which is the type that OpenCV uses to represent images. Data is stored in 8-bit, 3 channel BGR format.

In addition to the members inherited from numpy.ndarray, Frame defines the following attributes:

- Variables:

time (float) – The wall-clock time when this video-frame was captured, as number of seconds since the unix epoch (1970-01-01T00:00:00Z). This is the same format used by the Python standard library function time.time.

width (int) – The width of the frame, in pixels.

height (int) – The height of the frame, in pixels.

region (Region) – A Region corresponding to the full size of the frame, that is, Region(0, 0, width, height).

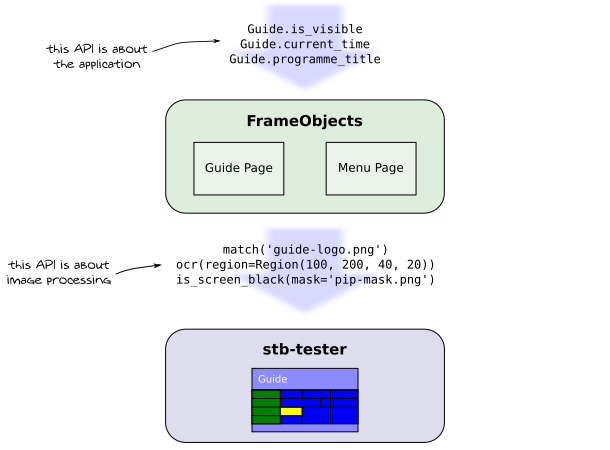

- class stbt.FrameObject#

Base class for user-defined Page Objects.

FrameObjects are Stb-tester’s implementation of the Page Object pattern. A FrameObject is a class that uses Stb-tester APIs like stbt.match() and stbt.ocr() to extract information from the screen, and it provides a higher-level API in the vocabulary and user-facing concepts of your own application.

Based on Martin Fowler’s PageObject diagram#

Stb-tester uses a separate instance of your FrameObject class for each frame of video captured from the device-under-test (hence the name “Frame Object”). Stb-tester provides additional tooling for writing, testing, and maintenance of FrameObjects.

To define your own FrameObject class:

Derive from stbt.FrameObject.

Define an is_visible property (using Python’s @property decorator) that returns True or False.

Define any other properties for information that you want to extract from the frame.

Inside each property, when you call an image-processing function (like stbt.match or stbt.ocr) you must specify the parameter frame=self._frame.

The following behaviours are provided automatically by the FrameObject base class:

Truthiness: A FrameObject instance is considered “truthy” if it is visible. Any other properties (apart from is_visible) will return None if the object isn’t visible.

Immutability: FrameObjects are immutable, because they represent information about a specific frame of video – in other words, an instance of a FrameObject represents the state of the device-under-test at a specific point in time. If you define any methods that change the state of the device-under-test, they should return a new FrameObject instance instead of modifying self.

Caching: Each property will be cached the first time it is used. This allows writing testcases in a natural way, while expensive operations like ocr will only be done once per frame.

For more details see Object Repository in the Stb-tester manual.
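The truthiness and caching behaviours listed above can be imitated in a few lines of plain Python. The following is a simplified sketch for illustration, not the stbt.FrameObject implementation: `FrameObjectSketch` and `Dialog` are hypothetical classes, and a dict stands in for the video frame.

```python
def _cached(name, fget):
    """Wrap a property getter with caching and the 'None if hidden' rule."""
    def getter(self):
        if name not in self._cache:
            if name != "is_visible" and not self.is_visible:
                self._cache[name] = None
            else:
                self._cache[name] = fget(self)
        return self._cache[name]
    return property(getter)


class FrameObjectSketch:
    def __init__(self, frame):
        self._frame = frame
        self._cache = {}

    def __init_subclass__(cls, **kwargs):
        # Replace each public property of the subclass with a cached version.
        super().__init_subclass__(**kwargs)
        for name, attr in list(vars(cls).items()):
            if isinstance(attr, property) and not name.startswith("_"):
                setattr(cls, name, _cached(name, attr.fget))

    def __bool__(self):
        return bool(self.is_visible)


class Dialog(FrameObjectSketch):
    title_reads = 0  # counts how often the "expensive" getter really runs

    @property
    def is_visible(self):
        return "dialog" in self._frame

    @property
    def title(self):
        Dialog.title_reads += 1
        return self._frame["dialog"].upper()


visible = Dialog({"dialog": "Are you sure?"})
hidden = Dialog({})

print(bool(visible), visible.title, visible.title, Dialog.title_reads)
print(bool(hidden), hidden.title)
```

Reading `visible.title` twice runs the getter once, and `hidden.title` is None without the getter running at all.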

The FrameObject base class defines several convenient methods and attributes:

- _fields#

A tuple containing the names of the public properties.

- __eq__(other) bool#

Two instances of the same FrameObject type are considered equal if the values of all the public properties match, even if the underlying frame is different. All falsey FrameObjects of the same type are equal.

- __hash__() int#

Two instances of the same FrameObject type are considered equal if the values of all the public properties match, even if the underlying frame is different. All falsey FrameObjects of the same type are equal.

- __init__(frame: Frame | None = None) None#

The default constructor takes an optional frame of video; if the frame is not provided, it will grab a live frame from the device-under-test.

If you override the constructor in your derived class (for example to accept additional parameters), make sure to accept an optional frame parameter and supply it to the super-class’s constructor.

- __repr__() str#

The object’s string representation shows all its public properties.

We only print properties we have already calculated, to avoid triggering expensive calculations.

- refresh(frame: Frame | None = None, **kwargs) FrameObject#

Returns a new FrameObject instance with a new frame.

self is not modified.

refresh is used by navigation functions that modify the state of the device-under-test.

By default refresh returns a new object of the same class as self, but you can override the return type by implementing refresh in your derived class.

Any additional keyword arguments are passed on to __init__.

- stbt.frames(timeout_secs: float | None = None) Iterator[Frame]#

Generator that yields video frames captured from the device-under-test.

For example:

    for frame in stbt.frames():
        # Do something with each frame here.
        # Remember to add a termination condition to `break` or `return`
        # from the loop, or specify `timeout_secs`; otherwise you'll have
        # an infinite loop!
        ...

See also stbt.get_frame.

- Parameters:

timeout_secs (int or float or None) – A timeout in seconds. After this timeout the iterator will be exhausted. That is, a for loop like for f in stbt.frames(timeout_secs=10) will terminate after 10 seconds. If timeout_secs is None (the default) then the iterator will yield frames forever, but you can stop iterating (for example with break) at any time.

- Return type:

Iterator[stbt.Frame]

- Returns:

An iterator of frames in OpenCV format (stbt.Frame).
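The two ways of ending the loop (a timeout, or an explicit break) can be tried out with a stand-in generator. `frames_sketch` and `grab` below are hypothetical; integers play the role of video frames, and the 10ms sleep only imitates waiting for the next frame.

```python
import itertools
import time


def frames_sketch(grab_frame, timeout_secs=None):
    """Yield frames until the timeout expires (forever if timeout_secs is None)."""
    deadline = None if timeout_secs is None else time.monotonic() + timeout_secs
    while deadline is None or time.monotonic() < deadline:
        yield grab_frame()


counter = itertools.count()


def grab():
    time.sleep(0.01)  # imitate waiting for the next frame
    return next(counter)


# With a timeout, the for loop ends on its own.
n = sum(1 for _ in frames_sketch(grab, timeout_secs=0.05))

# Without a timeout, the caller must break out of the loop.
seen = []
for frame in frames_sketch(grab):
    seen.append(frame)
    if len(seen) == 3:
        break

print(n, seen)
```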

- stbt.get_config( ) T#

- stbt.get_config( ) T | DefaultT

Read the value of key from section of the test-pack configuration file.

For example, if your configuration file looks like this:

    [test_pack]
    stbt_version = 30

    [my_company_name]
    backend_ip = 192.168.1.23

then you can read the value from your test script like this:

    backend_ip = stbt.get_config("my_company_name", "backend_ip")

This searches in the .stbt.conf file at the root of your test-pack, and in the config/test-farm/<hostname>.conf file matching the hostname of the stb-tester device where the script is running. Values in the host-specific config file override values in .stbt.conf. See Configuration files for more details.

Test scripts can use get_config to read tags that you specify at run-time: see Automatic configuration keys. For example:

    my_tag_value = stbt.get_config("result.tags", "my tag name")

Raises ConfigurationError if the specified section or key is not found, unless default is specified (in which case default is returned).
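The override and default rules described above can be reproduced with the standard library's configparser. This is a sketch under assumptions, not Stb-tester's implementation: `load`, `get_config_sketch` and the local `ConfigurationError` class are hypothetical, the host-specific IP address is made up, and the real function's full signature is not shown here.

```python
import configparser


class ConfigurationError(Exception):
    """Local stand-in for stbt's exception of the same name."""


_NO_DEFAULT = object()


def load(*sources):
    parser = configparser.ConfigParser()
    for text in sources:  # later sources override earlier ones
        parser.read_string(text)
    return parser


def get_config_sketch(parser, section, key, default=_NO_DEFAULT):
    try:
        return parser.get(section, key)
    except (configparser.NoSectionError, configparser.NoOptionError):
        if default is _NO_DEFAULT:
            raise ConfigurationError(
                "No such config value: %s.%s" % (section, key)) from None
        return default


test_pack_conf = """
[test_pack]
stbt_version = 30

[my_company_name]
backend_ip = 192.168.1.23
"""

# Stand-in for config/test-farm/<hostname>.conf (made-up value).
node_conf = """
[my_company_name]
backend_ip = 10.0.0.5
"""

parser = load(test_pack_conf, node_conf)
print(get_config_sketch(parser, "my_company_name", "backend_ip"))
print(get_config_sketch(parser, "test_pack", "stbt_version"))
print(get_config_sketch(parser, "my_company_name", "missing", default=None))
```

Note that configparser returns strings, so "30" would still need converting to an integer by the caller in this sketch.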

- stbt.get_frame() Frame#

Grabs a video frame from the device-under-test.

- Return type:

stbt.Frame

- Returns:

The most recent video frame in OpenCV format.

Most Stb-tester APIs (stbt.match, stbt.FrameObject constructors, etc.) will call get_frame if a frame isn’t specified explicitly.

If you call get_frame twice very quickly (faster than the video-capture framerate) you might get the same frame twice. To block until the next frame is available, use stbt.frames.

To save a frame to disk pass it to cv2.imwrite. Note that any file you write to the current working directory will appear as an artifact in the test-run results.

- stbt.get_rms_volume(duration_secs=3, stream=None) RmsVolumeResult#

Calculate the average RMS volume of the audio over the given duration.

For example, to check that your mute button works:

    stbt.press('KEY_MUTE')
    time.sleep(1)  # <- give it some time to take effect
    assert stbt.get_rms_volume().amplitude < 0.001  # -60 dB

- Parameters:

duration_secs (int or float) – The window over which you should average, in seconds. Defaults to 3s in accordance with short-term loudness from the EBU TECH 3341 specification.

stream (Iterator[AudioChunk]) – Audio stream to measure. Defaults to audio_chunks().

- Raises:

ZeroDivisionError – If duration_secs is shorter than one sample or stream contains no samples.

- Return type:

RmsVolumeResult
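The relationship between an RMS amplitude of 0.001 and -60 dB in the mute example follows from the usual definitions, sketched here in plain Python. `rms` and `amplitude_to_db` are hypothetical helpers, not stbt functions, and the samples are assumed to be floats between -1.0 and 1.0 as in AudioChunk.

```python
import math


def rms(samples):
    """Root-mean-square of the samples; ZeroDivisionError if there are none."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def amplitude_to_db(amplitude):
    """Convert a linear amplitude (1.0 = full scale) to decibels."""
    return 20 * math.log10(amplitude)


print(rms([0.5, -0.5, 0.5, -0.5]))
print(amplitude_to_db(0.001))
```

An empty list of samples makes `rms` divide by zero, which mirrors the ZeroDivisionError documented above.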

- class stbt.GrayscaleDiff( )#

Compares 2 frames by converting them to grayscale, calculating pixel-wise absolute differences, and ignoring differences below a threshold.

This was the default diffing algorithm for wait_for_motion and press_and_wait before v34.

- class stbt.Grid(region: Region, cols: int | None = None, rows: int | None = None, data: Sequence[Sequence[Any]] | None = None)#

A grid with items arranged left to right, then down.

For example a keyboard, or a grid of posters, arranged like this:

    ABCDE
    FGHIJ
    KLMNO

All items must be the same size, and the spacing between them must be consistent.

This class is useful for converting between pixel coordinates on a screen and x & y indexes into the grid positions.

- Parameters:

region (Region) – Where the grid is on the screen.

cols (int) – Width of the grid, in number of columns.

rows (int) – Height of the grid, in number of rows.

data – A 2D array (list of lists) containing data to associate with each cell. The data can be of any type. For example, if you are modelling a grid-shaped keyboard, the data could be the letter at each grid position. If data is specified, then cols and rows are optional.

- class Cell(index, position, region, data)#

A single cell in a Grid.

Don’t construct Cells directly; create a Grid instead.

- Variables:

index (int) – The cell’s 1D index into the grid, starting from 0 at the top left, counting along the top row left to right, then the next row left to right, etc.

position (Position) – The cell’s 2D index (x, y) into the grid (zero-based). For example in this grid “I” is index 8 and position (x=3, y=1):

    ABCDE
    FGHIJ
    KLMNO

region (Region) – Pixel coordinates (relative to the entire frame) of the cell’s bounding box.

data – The data corresponding to the cell, if data was specified when you created the Grid.

- get(index: int | None = None, position: PositionT | None = None, region: Region | None = None, data: Any = None)#

Retrieve a single cell in the Grid.

For example, let’s say that you’re looking for the selected item in a grid by matching a reference image of the selection border. Then you can find the (x, y) position in the grid of the selection, like this:

    selection = stbt.match("selection.png")
    cell = grid.get(region=selection.region)
    position = cell.position

You must specify one (and only one) of index, position, region, or data. For the meaning of these parameters see Grid.Cell.

A negative index counts backwards from the end of the grid (so -1 is the bottom right position).

region doesn’t have to match the cell’s pixel coordinates exactly; instead, this returns the cell that contains the center of the given region.

- Returns:

The Grid.Cell that matches the specified query; raises IndexError if the index/position/region is out of bounds or the data is not found.
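The relationship between a cell's 1D index, its 2D position, and a pixel region can be sketched in plain Python. This is only an illustration of the arithmetic described above, not stbt's implementation; the helper names (index_to_position, cell_containing) and the (x, y, right, bottom) tuple layout are hypothetical.

```python
# Illustrative sketch only; these helpers are not part of the stbt API.

def index_to_position(index, cols, rows):
    """Convert a 1D index (negative counts from the end) to (x, y)."""
    total = cols * rows
    if index < 0:
        index += total
    if not 0 <= index < total:
        raise IndexError("index out of bounds")
    return (index % cols, index // cols)


def cell_containing(grid_region, cols, rows, query_region):
    """Return the (x, y) of the cell that contains the center of query_region.

    Regions are hypothetical (x, y, right, bottom) tuples, in pixels.
    """
    gx, gy, gright, gbottom = grid_region
    qx, qy, qright, qbottom = query_region
    cx = (qx + qright) / 2
    cy = (qy + qbottom) / 2
    if not (gx <= cx < gright and gy <= cy < gbottom):
        raise IndexError("region is outside the grid")
    cell_w = (gright - gx) / cols
    cell_h = (gbottom - gy) / rows
    return (int((cx - gx) // cell_w), int((cy - gy) // cell_h))


# The 5x3 grid "ABCDE / FGHIJ / KLMNO" used in the examples above:
print(index_to_position(8, cols=5, rows=3))   # the cell holding "I"
print(index_to_position(-1, cols=5, rows=3))  # the bottom right cell
```

The query region only needs to overlap the right cell roughly, because the lookup uses the region's center rather than its exact edges.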

- class stbt.Image#

An image, possibly loaded from disk.

This is a subclass of numpy.ndarray, which is the type that OpenCV uses to represent images.

In addition to the members inherited from numpy.ndarray, Image defines the following attributes:

- Variables:

filename (str or None) – The filename that was given to stbt.load_image.

absolute_filename (str or None) – The absolute path resolved by stbt.load_image.

relative_filename (str or None) – The path resolved by stbt.load_image, relative to the root of the test-pack git repo.

- stbt.is_screen_black(

- frame: Frame | None = None,

- mask: Mask | Region | str = Region.ALL,

- threshold: int | None = None,

- region: Region = Region.ALL,

Check for the presence of a black screen in a video frame.

- Parameters:

frame (Frame) – If this is specified it is used as the video frame to check; otherwise a new frame is grabbed from the device-under-test. This is an image in OpenCV format (for example as returned by frames and get_frame).

mask (str|numpy.ndarray|Mask|Region) – A Region or a mask that specifies which parts of the image to analyse. This accepts anything that can be converted to a Mask using stbt.load_mask. See Regions and Masks.

threshold (int) – Even when a video frame appears to be black, the intensity of its pixels is not always 0. To differentiate almost-black from non-black pixels, a binary threshold is applied to the frame. The threshold value is in the range 0 (black) to 255 (white). The global default (20) can be changed by setting threshold in the [is_screen_black] section of .stbt.conf.

region (Region) – Deprecated synonym for mask. Use mask instead.

- Returns:

An object that will evaluate to true if the frame was black, or false if not black. The object has the following attributes:

black (bool) – True if the frame was black.

frame (stbt.Frame) – The video frame that was analysed.

Changed in v33: mask accepts anything that can be converted to a Mask using load_mask. The region parameter is deprecated; pass your Region to mask instead. You can’t specify mask and region at the same time.
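To make the role of threshold and mask concrete, here is a minimal pure-Python sketch of a binary threshold applied to a grayscale frame. It is not stbt's implementation: the function name looks_black, the list-of-lists frame format, and the choice to treat a pixel equal to the threshold as black are all assumptions made for illustration.

```python
# Illustrative sketch only; not how stbt.is_screen_black is implemented.

def looks_black(gray_frame, threshold=20, mask=None):
    """Return True if no analysed pixel is brighter than `threshold`.

    gray_frame: rows of 0-255 intensity values.
    mask: optional rows of booleans; True means "analyse this pixel".
    Assumption: a pixel equal to `threshold` still counts as black.
    """
    for y, row in enumerate(gray_frame):
        for x, value in enumerate(row):
            if mask is not None and not mask[y][x]:
                continue  # masked-out pixels are ignored
            if value > threshold:
                return False
    return True


almost_black = [[0, 5], [12, 20]]
with_logo = [[0, 5], [12, 200]]
ignore_bottom_right = [[True, True], [True, False]]

print(looks_black(almost_black))
print(looks_black(with_logo))
print(looks_black(with_logo, mask=ignore_bottom_right))
```

The third call shows why a mask is useful: a bright channel logo in one corner no longer prevents the rest of the frame from being treated as black.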

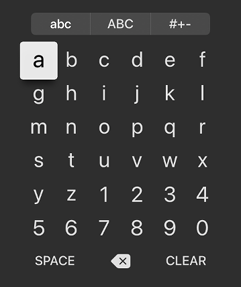

- class stbt.Keyboard(*, mask: Mask | Region | str = Region.ALL, navigate_timeout: float = 60)#

Models the behaviour of an on-screen keyboard.

You customize it for the appearance & behaviour of the keyboard you’re testing by specifying two things:

A Directed Graph that specifies the navigation between every key on the keyboard. For example: When A is focused, pressing KEY_RIGHT on the remote control goes to B, and so on.

A Page Object that tells you which key is currently focused on the screen. See the page parameter to enter_text and navigate_to.

The constructor takes the following parameters:

- Parameters:

mask (str|numpy.ndarray|Mask|Region) – A mask to use when calling stbt.press_and_wait to determine when the current focus has finished moving. If the search page has a blinking cursor you need to mask out the region where the cursor can appear, as well as any other regions with dynamic content (such as a picture-in-picture with live TV). See stbt.press_and_wait for more details about the mask.

navigate_timeout (int or float) – Timeout (in seconds) for navigate_to. In practice navigate_to should only time out if you have a bug in your model or in the real keyboard under test.

For example, let’s model the lowercase keyboard from the YouTube search page on Apple TV:

    # 1. Specify the keyboard's navigation model
    # ------------------------------------------

    kb = stbt.Keyboard()

    # The 6x6 grid of letters & numbers:
    kb.add_grid(stbt.Grid(stbt.Region(x=125, y=175, right=425, bottom=475),
                          data=["abcdef",
                                "ghijkl",
                                "mnopqr",
                                "stuvwx",
                                "yz1234",
                                "567890"]))
    # The 3x1 grid of special keys:
    kb.add_grid(stbt.Grid(stbt.Region(x=125, y=480, right=425, bottom=520),
                          data=[[" ", "DELETE", "CLEAR"]]))

    # The `add_grid` calls (above) defined the transitions within each grid.
    # Now we need to specify the transitions from the bottom row of numbers
    # to the larger keys below them:
    #
    #     5 6 7 8 9 0
    #     ↕ ↕ ↕ ↕ ↕ ↕
    #     SPC DEL CLR
    #
    # Note that `add_transition` adds the symmetrical transition (KEY_UP)
    # by default.
    kb.add_transition("5", " ", "KEY_DOWN")
    kb.add_transition("6", " ", "KEY_DOWN")
    kb.add_transition("7", "DELETE", "KEY_DOWN")
    kb.add_transition("8", "DELETE", "KEY_DOWN")
    kb.add_transition("9", "CLEAR", "KEY_DOWN")
    kb.add_transition("0", "CLEAR", "KEY_DOWN")

    # 2. A Page Object that describes the appearance of the keyboard
    # --------------------------------------------------------------

    class SearchKeyboard(stbt.FrameObject):
        """The YouTube search keyboard on Apple TV"""

        @property
        def is_visible(self):
            # Implementation left to the reader. Should return True if the
            # keyboard is visible and focused.
            ...

        @property
        def focus(self):
            """Returns the focused key.

            Used by `Keyboard.enter_text` and `Keyboard.navigate_to`.

            Note: The reference image (focus.png) is carefully cropped so that
            it will match the normal keys as well as the larger "SPACE",
            "DELETE" and "CLEAR" keys. The middle of the image (where the key's
            label appears) is transparent so that it will match any key.
            """
            m = stbt.match("focus.png", frame=self._frame)
            if m:
                return kb.find_key(region=m.region)
            else:
                return None

        # Your Page Object can also define methods for your test scripts to
        # use:

        def enter_text(self, text):
            return kb.enter_text(text.lower(), page=self)

        def clear(self):
            page = kb.navigate_to("CLEAR", page=self)
            stbt.press_and_wait("KEY_OK")
            return page.refresh()

For a detailed tutorial, including an example that handles multiple keyboard modes (lowercase, uppercase, and symbols) see our article Testing on-screen keyboards.

Changed in v33:

Added class stbt.Keyboard.Key (the type returned from find_key). This used to be a private API, but now it is public so that you can use it in type annotations for your Page Object’s focus property.

Tries to recover from missed or double keypresses. To disable this behaviour specify retries=0 when calling enter_text or navigate_to.

Increased default navigate_timeout from 20 to 60 seconds.

Changed in v34:

The property of the page object should be called focus, not selection (for backward compatibility we still support selection).

- class Key(

- name: str | None = None,

- text: str | None = None,

- region: Region | None = None,

- mode: str | None = None,

Represents a key on the on-screen keyboard.

This is returned by stbt.Keyboard.find_key. Don’t create instances of this class directly.

It has attributes name, text, region, and mode. See Keyboard.add_key.

- add_key( )#

Add a key to the model (specification) of the keyboard.

- Parameters:

name (str) – The text or label you can see on the key.

text (str) – The text that will be typed if you press OK on the key. If not specified, defaults to name if name is exactly 1 character long, otherwise it defaults to "" (an empty string). An empty string indicates that the key doesn’t type any text when pressed (for example a “caps lock” key to change modes).

region (stbt.Region) – The location of this key on the screen. If specified, you can look up a key’s name & text by region using find_key(region=...).

mode (str) – The mode that the key belongs to (such as “lowercase”, “uppercase”, “shift”, or “symbols”) if your keyboard supports different modes. Note that the same key, if visible in different modes, needs to be modelled as separate keys (for example (name=" ", mode="lowercase") and (name=" ", mode="uppercase")) because their navigation connections are totally different: pressing up from the former goes to lowercase “c”, but pressing up from the latter goes to uppercase “C”. mode is optional if your keyboard doesn’t have modes, or if you only need to use the default mode.

- Returns:

The added key (stbt.Keyboard.Key). This is an object that you can use with add_transition.

- Raises:

ValueError if the key is already present in the model.

- find_key(

- name: str | None = None,

- text: str | None = None,

- region: Region | None = None,

- mode: str | None = None,

Find a key in the model (specification) of the keyboard.

Specify one or more of name, text, region, and mode (as many as are needed to uniquely identify the key).

For example, your Page Object’s focus property would do some image processing to find the position of the focus, and then use find_key to identify the focused key based on that region.

- Returns:

A stbt.Keyboard.Key object that unambiguously identifies the key in the model. It has “name”, “text”, “region”, and “mode” attributes. You can use this object as the source or target parameter of add_transition.

- Raises:

ValueError if the key does not exist in the model, or if it can’t be identified unambiguously (that is, if two or more keys match the given parameters).

- find_keys(

- name: str | None = None,

- text: str | None = None,

- region: Region | None = None,

- mode: str | None = None,

Find matching keys in the model of the keyboard.

This is like find_key, but it returns a list containing any keys that match the given parameters. For example, if there is a space key in both the lowercase and uppercase modes of the keyboard, calling find_keys(text=" ") will return a list of 2 objects [Key(text=" ", mode="lowercase"), Key(text=" ", mode="uppercase")].

This method doesn’t raise an exception; the list will be empty if no keys matched.

- add_transition(

- source: Key | dict | str,

- target: Key | dict | str,

- keypress: str,

- mode: str | None = None,

- symmetrical: bool = True,

Add a transition to the model (specification) of the keyboard.

For example: To go from “A” to “B”, press “KEY_RIGHT” on the remote control.

- Parameters:

source – The starting key. This can be a Key object returned from add_key or find_key; or it can be a dict that contains one or more of “name”, “text”, “region”, and “mode” (as many as are needed to uniquely identify the key using find_key). For convenience, a single string is treated as “name” (but this may not be enough to uniquely identify the key if your keyboard has multiple modes).

target – The key you’ll land on after pressing the button on the remote control. This accepts the same types as source.

keypress (str) – The name of the key you need to press on the remote control, for example “KEY_RIGHT”.

mode (str) – Optional keyboard mode that applies to both source and target. For example, the two following calls are the same:

    add_transition("c", " ", "KEY_DOWN", mode="lowercase")

    add_transition({"name": "c", "mode": "lowercase"},
                   {"name": " ", "mode": "lowercase"},
                   "KEY_DOWN")

symmetrical (bool) – By default, if the keypress is “KEY_LEFT”, “KEY_RIGHT”, “KEY_UP”, or “KEY_DOWN”, this will automatically add the opposite transition. For example, if you call add_transition("a", "b", "KEY_RIGHT") this will also add the transition ("b", "a", "KEY_LEFT"). Set this parameter to False to disable this behaviour. This parameter has no effect if keypress is not one of the 4 directional keys.

- Raises:

ValueError if the source or target keys do not exist in the model, or if they can’t be identified unambiguously.
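The effect of the symmetrical parameter can be sketched with a small directed graph stored in a dict. This is a simplified illustration rather than stbt's internal data structure: the standalone add_transition function, the OPPOSITE table, and the {key: {keypress: target}} layout are assumptions, and keys are identified by name only (no modes).

```python
# Illustrative sketch only; not the stbt.Keyboard implementation.

OPPOSITE = {
    "KEY_LEFT": "KEY_RIGHT",
    "KEY_RIGHT": "KEY_LEFT",
    "KEY_UP": "KEY_DOWN",
    "KEY_DOWN": "KEY_UP",
}


def add_transition(graph, source, target, keypress, symmetrical=True):
    """Record that pressing `keypress` on `source` moves focus to `target`."""
    graph.setdefault(source, {})[keypress] = target
    graph.setdefault(target, {})
    # Only the 4 directional keys have an opposite transition.
    if symmetrical and keypress in OPPOSITE:
        graph[target][OPPOSITE[keypress]] = source


graph = {}
add_transition(graph, "a", "b", "KEY_RIGHT")
add_transition(graph, "a", "g", "KEY_DOWN", symmetrical=False)
print(graph["b"])  # the reverse KEY_LEFT transition was added
print(graph["g"])  # no reverse transition was added
```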

- add_edgelist( ) None#

Add keys and transitions specified in a string in “edgelist” format.

- Parameters:

edgelist (str) –

A multi-line string where each line is in the format <source_name> <target_name> <keypress>. For example, the specification for a qwerty keyboard might look like this:

    '''
    Q W KEY_RIGHT
    Q A KEY_DOWN
    W E KEY_RIGHT
    ...
    '''

The name “SPACE” will be converted to the space character (” “). This is because space is used as the field separator; otherwise it wouldn’t be possible to specify the space key using this format.

Lines starting with “###” are ignored (comments).

mode (str) – Optional mode that applies to all the keys specified in edgelist. See add_key for more details about modes. It isn’t possible to specify transitions between different modes using this edgelist format; use add_transition for that.

symmetrical (bool) – See add_transition.
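As a rough illustration of the edgelist format described above, the following sketch parses such a string into (source, target, keypress) tuples. The parse_edgelist helper is hypothetical and is not part of stbt; it only mirrors the documented rules (three whitespace-separated fields, “SPACE” converted to a space character, lines starting with “###” ignored).

```python
# Illustrative sketch only; parse_edgelist is not an stbt function.

def parse_edgelist(edgelist):
    """Return a list of (source_name, target_name, keypress) tuples."""
    transitions = []
    for line in edgelist.splitlines():
        line = line.strip()
        if not line or line.startswith("###"):
            continue  # blank line or comment
        fields = line.split()
        if len(fields) != 3:
            raise ValueError("expected 3 fields, got: %r" % line)
        source, target, keypress = fields
        # "SPACE" stands for the space key, because a literal space is
        # the field separator.
        source = " " if source == "SPACE" else source
        target = " " if target == "SPACE" else target
        transitions.append((source, target, keypress))
    return transitions


spec = """
### top row
Q W KEY_RIGHT
Q A KEY_DOWN
Z SPACE KEY_DOWN
"""
for transition in parse_edgelist(spec):
    print(transition)
```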

- add_grid( ) Grid#

Add keys, and transitions between them, to the model of the keyboard.

If the keyboard (or part of the keyboard) is arranged in a regular grid, you can use stbt.Grid to easily specify the positions of those keys. This only works if the columns & rows are all of the same size.

If your keyboard has keys outside the grid, you will still need to specify the transitions from the edge of the grid onto the outside keys, using add_transition. See the example above.

- Parameters:

grid (stbt.Grid) – The grid to model. The data associated with each cell will be used for the key’s “name” attribute (see add_key).

mode (str) – Optional mode that applies to all the keys specified in grid. See add_key for more details about modes.

merge (bool) – If True, adjacent keys with the same name and mode will be merged, and a single larger key will be added in its place.

- Returns:

A new stbt.Grid where each cell’s data is a key object that can be used with add_transition (for example to define additional transitions from the edges of this grid onto other keys).

- enter_text(

- text: str,

- page: FrameObject,

- verify_every_keypress: bool = False,

- retries: int = 2,

Enter the specified text using the on-screen keyboard.

- Parameters:

text (str) – The text to enter. If your keyboard only supports a single case then you need to convert the text to uppercase or lowercase, as appropriate, before passing it to this method.

page (stbt.FrameObject) – An instance of a stbt.FrameObject sub-class that describes the appearance of the on-screen keyboard. It must implement at least a focus property that returns the currently focused key (a Key object as returned from find_key), as in the example above.

When you call enter_text, page must represent the current state of the device-under-test.

verify_every_keypress (bool) – If True, we will read the focused key after every keypress and assert that it matches the model. If False (the default) we will only verify the focused key corresponding to each of the characters in text. For example: to get from A to D you need to press KEY_RIGHT three times. The default behaviour will only verify that the focused key is D after the third keypress. This is faster, and closer to the way a human uses the on-screen keyboard.

Set this to True to help debug your model if enter_text is behaving incorrectly.

retries (int) – Number of recovery attempts if a keypress doesn’t have the expected effect according to the model. Allows recovering from missed keypresses and double keypresses.

- Returns:

A new FrameObject instance of the same type as page, reflecting the device-under-test’s new state after the keyboard navigation completed.

Typically your FrameObject will provide its own enter_text method, so your test scripts won’t call this Keyboard class directly. See the example above.

- navigate_to( ) FrameObject#

Move the focus to the specified key.

This won’t press KEY_OK on the target; it only moves the focus there.

- Parameters:

target – This can be a Key object returned from find_key, or it can be a dict that contains one or more of “name”, “text”, “region”, and “mode” (as many as are needed to identify the key using find_keys). If more than one key matches the given parameters, navigate_to will navigate to the closest one. For convenience, a single string is treated as “name”.

page (stbt.FrameObject) – See enter_text.

verify_every_keypress (bool) – See enter_text.

retries (int) – See enter_text.

- Returns:

A new FrameObject instance of the same type as page, reflecting the device-under-test’s new state after the keyboard navigation completed.
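The documentation above doesn't say how a route between two keys is chosen. One plausible approach, shown here purely as an assumption, is a breadth-first search over the directed graph of transitions, which yields the fewest keypresses. The shortest_keypresses helper and the {key: {keypress: target}} graph layout are hypothetical and not part of stbt.

```python
# Illustrative sketch only; not how stbt.Keyboard.navigate_to is implemented.
from collections import deque


def shortest_keypresses(graph, start, target):
    """Return the shortest list of keypresses that moves focus from
    `start` to `target`, using breadth-first search."""
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        key, path = queue.popleft()
        if key == target:
            return path
        for keypress, neighbour in graph.get(key, {}).items():
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append((neighbour, path + [keypress]))
    raise ValueError("no route from %r to %r" % (start, target))


# A single row of keys: A <-> B <-> C <-> D
graph = {
    "A": {"KEY_RIGHT": "B"},
    "B": {"KEY_LEFT": "A", "KEY_RIGHT": "C"},
    "C": {"KEY_LEFT": "B", "KEY_RIGHT": "D"},
    "D": {"KEY_LEFT": "C"},
}
print(shortest_keypresses(graph, "A", "D"))
```

This matches the example given for verify_every_keypress: getting from A to D takes three presses of KEY_RIGHT.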

- class stbt.Keypress#

Information about a keypress sent with stbt.press.

- start_time: float#

The time just before the keypress started (in seconds since the unix epoch, like time.time() and stbt.Frame.time).

- frame_before: stbt.Frame#

The most recent video-frame just before the keypress started. Typically this is used by functions like stbt.press_and_wait to detect when the device-under-test reacted to the keypress.

- stbt.last_keypress() Keypress | None#

Returns information about the last key-press sent to the device under test.

See the return type of stbt.press.

- class stbt.Learning#

An enumeration.

- NONE = 0#

Don’t learn the menu structure for future calls to navigate_1d or navigate_grid.

- TEMPORARY = 1#

Learn the menu structure to speed up future calls to navigate_1d or navigate_grid during this test-run (that is, during the lifetime of the Python process).

- PERSISTENT = 2#

Learn the menu structure and save it to a persistent cache on the Stb-tester Node so that it can be used to speed up future calls to navigate_1d or navigate_grid when running tests on the same Stb-tester Node.

- stbt.load_image(filename: Image | str) Image#

- stbt.load_image( ) Image

- stbt.load_image( ) Image

Find & read an image from disk.

If given a relative filename, this will search in the directory of the Python file that called load_image, then in the directory of that file’s caller, and so on, until it finds the file. This allows you to use load_image in a helper function that takes a filename from its caller.

Finally this will search in the current working directory. This allows loading an image that you had previously saved to disk during the same test run.

This is the same search algorithm used by stbt.match and similar functions.

- Parameters:

filename (str) – A relative or absolute filename.

flags – Flags to pass to cv2.imread. Deprecated; use color_channels instead.

color_channels – Tuple of acceptable numbers of color channels for the output image: 1 for grayscale, 3 for color, and 4 for color with an alpha (transparency) channel. For example, color_channels=(3, 4) will accept color images with or without an alpha channel. Defaults to (3, 4).

If the image doesn’t match the specified color_channels it will be converted to the specified format.

- Return type:

- Returns:

An image in OpenCV format (that is, a numpy.ndarray of 8-bit values). With the default color_channels parameter this will be 3 channels BGR, or 4 channels BGRA if the file has transparent pixels.

- Raises:

IOError if the specified path doesn’t exist or isn’t a valid image file.

Changed in v33: Added the color_channels parameter and deprecated flags. The image will always be converted to the format specified by color_channels (previously it was only converted to the format specified by flags if it was given as a filename, not as a stbt.Image or numpy array). The returned numpy array is read-only.
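The lookup order described for relative filenames (each caller's directory in turn, then the current working directory) can be sketched as follows. This is not stbt's code: find_file is a hypothetical helper, and the list of caller directories is passed in explicitly here rather than being derived from the Python call stack as load_image is described as doing.

```python
# Illustrative sketch only; find_file is not an stbt function.
import os


def find_file(filename, caller_dirs, cwd):
    """Return the first existing path for `filename`.

    caller_dirs: directories of the calling Python files, nearest caller
    first. The current working directory is searched last.
    """
    if os.path.isabs(filename):
        candidates = [filename]
    else:
        candidates = [os.path.join(d, filename)
                      for d in list(caller_dirs) + [cwd]]
    for candidate in candidates:
        if os.path.isfile(candidate):
            return candidate
    raise IOError("No such file: %s" % filename)
```

With this ordering, a helper module that lives in one directory can accept a filename from a test script in another directory, and the image next to the test script will still be found.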

- stbt.load_mask(mask: Mask | Region | str) Mask#

Used to load a mask from disk, or to create a mask from a Region.

A mask is a black & white image (the same size as the video-frame) that specifies which parts of the frame to process: White pixels select the area to process, black pixels the area to ignore.

In most cases you don’t need to call load_mask directly; Stb-tester’s image-processing functions such as is_screen_black, press_and_wait, and wait_for_motion will call load_mask with their mask parameter. This function is a public API so that you can use it if you are implementing your own image-processing functions.

Note that you can pass a Region directly to the mask parameter of stbt functions, and you can create more complex masks by adding, subtracting, or inverting Regions (see Regions and Masks).

- Parameters:

mask – A relative or absolute filename of a mask PNG image. If given a relative filename, this uses the algorithm from load_image to find the file.

Or, a Region that specifies the area to process.

- Returns:

A mask as used by is_screen_black, press_and_wait, wait_for_motion, and similar image-processing functions.

Added in v33.

- class stbt.Mask#

Internal representation of a mask.

Most users will never need to use this type directly; instead, pass a filename or a Region to the mask parameter of APIs like stbt.wait_for_motion. See Regions and Masks.

- static from_alpha_channel(image: Image | str) Mask#

Create a mask from the alpha channel of an image.

- Parameters:

image (string or numpy.ndarray) – An image with an alpha (transparency) channel. This can be the filename of a png file on disk, or an image previously loaded with stbt.load_image.

Filenames should be relative paths. See stbt.load_image for the path lookup algorithm.

- to_array( ) tuple[ndarray | None, Region]#

Materialize the mask to a numpy array of the specified size.

Most users will never need to call this method; it’s for people who are implementing their own image-processing algorithms.

- Parameters:

region (stbt.Region) – A Region matching the size of the frame that you are processing.

color_channels (int) – The number of channels required (1 or 3), according to your image-processing algorithm’s needs. All channels will be identical — for example with 3 channels, pixels will be either [0, 0, 0] or [255, 255, 255].

- Return type:

tuple[numpy.ndarray | None, Region]

- Returns:

A tuple of:

An image (numpy array), where masked-in pixels are white (255) and masked-out pixels are black (0). The array is the same size as the region in the second member of this tuple.

A bounding box (stbt.Region) around the masked-in area. If most of the frame is masked out, limiting your image-processing operations to this region will be faster.

If the mask is just a Region, the first member of the tuple (the image) will be None because the bounding-box is sufficient.

- stbt.match(

- image: Image | str,

- frame: Frame | None = None,

- match_parameters: MatchParameters | None = None,

- region: Region = Region.ALL,

Search for an image in a single video frame.

- Parameters:

image (string or numpy.ndarray) – The image to search for. It can be the filename of a png file on disk, or a numpy array containing the pixel data in 8-bit BGR format. If the image has an alpha channel, any transparent pixels are ignored.

Filenames should be relative paths. See stbt.load_image for the path lookup algorithm.

8-bit BGR numpy arrays are the same format that OpenCV uses for images. This allows generating reference images on the fly (possibly using OpenCV) or searching for images captured from the device-under-test earlier in the test script.

frame (stbt.Frame or numpy.ndarray) – If this is specified it is used as the video frame to search in; otherwise a new frame is grabbed from the device-under-test. This is an image in OpenCV format (for example as returned by frames and get_frame).

match_parameters (MatchParameters) – Customise the image matching algorithm. See MatchParameters for details.

region (Region) – Only search within the specified region of the video frame.

- Returns:

A MatchResult, which will evaluate to true if a match was found, false otherwise.

- stbt.match_all(

- image: Image | str,

- frame: Frame | None = None,

- match_parameters: MatchParameters | None = None,

- region: Region = Region.ALL,

Search for all instances of an image in a single video frame.

Arguments are the same as match.

- Returns:

An iterator of zero or more MatchResult objects (one for each position in the frame where image matches).

Examples:

    all_buttons = list(stbt.match_all("button.png"))

    for match_result in stbt.match_all("button.png"):
        # do something with match_result here
        ...

- stbt.match_text(

- text: str,

- frame: Frame | None = None,

- region: Region = Region.ALL,

- mode: OcrMode = OcrMode.PAGE_SEGMENTATION_WITHOUT_OSD,

- lang: str | None = None,

- tesseract_config: dict[str, bool | str | int] | None = None,

- case_sensitive: bool = False,

- upsample: bool | None = None,

- text_color: Color | None = None,

- text_color_threshold: float | None = None,

- engine: OcrEngine | None = None,

- char_whitelist: str | None = None,

Search for the specified text in a single video frame.

This can be used as an alternative to match, searching for text instead of an image.

- Parameters:

text (str) – The text to search for.

frame – See ocr.

region – See ocr.

mode – See ocr.

lang – See ocr.

tesseract_config – See ocr.

upsample – See ocr.

text_color – See ocr.

text_color_threshold – See ocr.

engine – See ocr.

char_whitelist – See ocr.

case_sensitive (bool) – Ignore case if False (the default).

- Returns:

A TextMatchResult, which will evaluate to true if the text was found, false otherwise.

For example, to select a button in a vertical menu by name (in this case “TV Guide”):

    m = stbt.match_text("TV Guide")
    assert m.match
    while not stbt.match('selected-button.png').region.contains(m.region):
        stbt.press('KEY_DOWN')

- class stbt.MatchMethod#

An enum. See MatchParameters for documentation of these values.

- SQDIFF = 'sqdiff'#

- SQDIFF_NORMED = 'sqdiff-normed'#

- CCORR_NORMED = 'ccorr-normed'#

- CCOEFF_NORMED = 'ccoeff-normed'#

- class stbt.MatchParameters(

- match_method: MatchMethod | None = None,

- match_threshold: float | None = None,

- confirm_method: ConfirmMethod | None = None,

- confirm_threshold: float | None = None,

- erode_passes: int | None = None,

Parameters to customise the image processing algorithm used by match, wait_for_match, and press_until_match.

You can change the default values for these parameters by setting a key (with the same name as the corresponding python parameter) in the [match] section of .stbt.conf. But we strongly recommend that you don’t change the default values from what is documented here.

You should only need to change these parameters when you’re trying to match a reference image that isn’t actually a perfect match – for example if there’s a translucent background with live TV visible behind it; or if you have a reference image of a button’s background and you want it to match even if the text on the button doesn’t match.

- Parameters:

match_method (MatchMethod) – The method to be used by the first pass of stb-tester’s image matching algorithm, to find the most likely location of the reference image within the larger source image. For details see OpenCV’s cv2.matchTemplate. Defaults to MatchMethod.SQDIFF.

match_threshold (float) – Overall similarity threshold for the image to be considered a match. This threshold applies to the average similarity across all pixels in the image. Valid values range from 0 (anything is considered to match) to 1 (the match has to be pixel perfect). Defaults to 0.98.

confirm_method (ConfirmMethod) – The method to be used by the second pass of stb-tester’s image matching algorithm, to confirm that the region identified by the first pass is a good match.

The first pass often gives false positives: It can report a “match” for an image with obvious differences, if the differences are local to a small part of the image. The second pass is more CPU-intensive, but it only checks the position of the image that the first pass identified. The allowed values are:

- ConfirmMethod.NONE:

Do not confirm the match. This is useful if you know that the reference image is different in some of the pixels. For example to find a button, even if the text inside the button is different.

- ConfirmMethod.ABSDIFF:

Compare the absolute difference of each pixel from the reference image against its counterpart from the candidate region in the source video frame.

- ConfirmMethod.NORMED_ABSDIFF:

Normalise the pixel values from both the reference image and the candidate region in the source video frame, then compare the absolute difference as with ABSDIFF.

This method is better at noticing differences in low-contrast images (compared to the ABSDIFF method), but it isn’t suitable for reference images that don’t have any structure (that is, images that are a single solid color without any lines or variation).

This is the default method, with a default confirm_threshold of 0.70.

confirm_threshold (float) –

The minimum allowed similarity between any given pixel in the reference image and the corresponding pixel in the source video frame, as a fraction of the pixel’s total luminance range.

Unlike match_threshold, this threshold applies to each pixel individually: Any pixel that exceeds this threshold will cause the match to fail (but see erode_passes below).

Valid values range from 0 (less strict) to 1.0 (more strict). Useful values tend to be around 0.84 for ABSDIFF, and 0.70 for NORMED_ABSDIFF. Defaults to 0.70.

erode_passes (int) – After the ABSDIFF or NORMED_ABSDIFF absolute difference is taken, stb-tester runs an erosion algorithm that removes single-pixel differences to account for noise and slight rendering differences. Useful values are 1 (the default) and 0 (to disable this step).

- class stbt.MatchResult#

The result from match.

- Variables:

time (float) – The time at which the video-frame was captured, in seconds since 1970-01-01T00:00Z. This timestamp can be compared with system time (time.time()).

match (bool) – True if a match was found. This is the same as evaluating MatchResult as a bool. That is, if result: will behave the same as if result.match:.

region (Region) – Coordinates where the image was found (or of the nearest match, if no match was found).

first_pass_result (float) – Value between 0 (poor) and 1.0 (excellent match) from the first pass of stb-tester’s image matching algorithm (see MatchParameters for details).

frame (Frame) – The video frame that was searched, as given to match.

image (Image) – The reference image that was searched for, as given to match.

- exception stbt.MatchTimeout#

Bases: stbt.UITestFailure

Exception raised by wait_for_match.

- Variables:

screenshot (Frame) – The last video frame that wait_for_match checked before timing out.

expected (str) – Filename of the image that was being searched for.

timeout_secs (int or float) – Number of seconds that the image was searched for.

- class stbt.MotionResult#

The result from detect_motion and wait_for_motion.

- Variables:

time (float) – The time at which the video-frame was captured, in seconds since 1970-01-01T00:00Z. This timestamp can be compared with system time (time.time()).

motion (bool) – True if motion was found. This is the same as evaluating MotionResult as a bool. That is, if result: will behave the same as if result.motion:.

region (Region) – Bounding box where the motion was found, or None if no motion was found.

frame (Frame) – The video frame in which motion was (or wasn’t) found.

- exception stbt.MotionTimeout#

Bases: stbt.UITestFailure

Exception raised by wait_for_motion.

- Variables:

screenshot (Frame) – The last video frame that wait_for_motion checked before timing out.

mask (Mask or None) – The mask that was used, if any.

timeout_secs (int or float) – Number of seconds that motion was searched for.

- class stbt.MultiPress(

- key_mapping: dict[str, str] | None = None,

- interpress_delay_secs: float | None = None,

- interletter_delay_secs: float = 1,

Helper for entering text using multi-press on a numeric keypad.

In some apps, the search page allows entering text by pressing the keys on the remote control’s numeric keypad: press the number “2” once for “A”, twice for “B”, etc.:

    1.,     ABC2    DEF3
    GHI4    JKL5    MNO6
    PQRS7   TUV8    WXYZ9
          [space]0

To enter text with this mechanism, create an instance of this class and call its enter_text method. For example:

    multipress = stbt.MultiPress()
    multipress.enter_text("teletubbies")

The constructor takes the following parameters:

- Parameters:

key_mapping (dict) –

The mapping from number keys to letters. The default mapping is:

    {
        "KEY_0": " 0",
        "KEY_1": "1.,",
        "KEY_2": "abc2",
        "KEY_3": "def3",
        "KEY_4": "ghi4",
        "KEY_5": "jkl5",
        "KEY_6": "mno6",
        "KEY_7": "pqrs7",
        "KEY_8": "tuv8",
        "KEY_9": "wxyz9",
    }

This matches the arrangement of the letters A-Z on the digit keys from ITU E.161 / ISO 9995-8.

The value you pass in this parameter is merged with the default mapping. For example, to override the punctuation characters you can specify key_mapping={"KEY_1": "@1.,-_"}.

The dict’s key names must match the remote-control key names accepted by stbt.press. The dict’s values are a string or sequence of the corresponding letters, in the order that they are entered when pressing that key.

interpress_delay_secs (float) – The time to wait between every key-press, in seconds. This defaults to 0.3, the same default as stbt.press.

interletter_delay_secs (float) – The time to wait between letters on the same key, in seconds. For example, to enter “AB” you need to press key “2” once, then wait, then press it again twice. If you don’t wait, the device-under-test would see three consecutive keypresses, which mean the letter “C”.
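To make the mapping merge and the inter-letter wait concrete, here is a minimal sketch of how text could be turned into a sequence of key presses. It is not the library's implementation: merged_mapping, plan_presses and the "WAIT" marker are hypothetical names, and lower-casing the input is an assumption made for this example.

```python
from typing import Dict, List, Optional, Tuple

# Default mapping, copied from the parameter description above.
DEFAULT_KEY_MAPPING: Dict[str, str] = {
    "KEY_0": " 0", "KEY_1": "1.,", "KEY_2": "abc2", "KEY_3": "def3",
    "KEY_4": "ghi4", "KEY_5": "jkl5", "KEY_6": "mno6", "KEY_7": "pqrs7",
    "KEY_8": "tuv8", "KEY_9": "wxyz9",
}


def merged_mapping(overrides: Optional[Dict[str, str]] = None) -> Dict[str, str]:
    """Merge user overrides into the default mapping (overrides win per key)."""
    mapping = dict(DEFAULT_KEY_MAPPING)
    mapping.update(overrides or {})
    return mapping


def plan_presses(text: str,
                 key_mapping: Optional[Dict[str, str]] = None) -> List[str]:
    """Return key names to press, with "WAIT" between letters on the same key."""
    # Build a lookup: letter -> (key name, number of presses needed).
    lookup: Dict[str, Tuple[str, int]] = {}
    for key, letters in merged_mapping(key_mapping).items():
        for presses, letter in enumerate(letters, start=1):
            lookup.setdefault(letter, (key, presses))

    plan: List[str] = []
    previous_key = None
    for char in text.lower():  # assumption: input is matched case-insensitively
        key, presses = lookup[char]  # raises KeyError for unmapped characters
        if key == previous_key:
            plan.append("WAIT")  # corresponds to interletter_delay_secs
        plan.extend([key] * presses)
        previous_key = key
    return plan
```

For “ab” this yields one press of KEY_2, a wait, then two presses of KEY_2. Without the wait, the three presses would be read as “c”.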

- stbt.navigate_1d(

- target: str,

- page: FrameObject,

- direction: Direction,

- *,

- mask: Mask | Region | str = Region.ALL,

- timeout_secs: float = 120,

- get_focus: Callable[[FrameObject], str] | None = None,

- eq: Callable[[str, str], bool] | None = None,

- press_and_wait: Callable[[str], Transition | TransitionStatus] | None = None,

- recover: str | Callable[[NavigationState], FrameObject] | None = None,

- retry_missed_keypresses: bool = False,

- learning: Learning = Learning.PERSISTENT,

- id: str | None = None,

Navigate through a 1-dimensional menu, searching for a target.

This doesn’t press KEY_OK on the target; it only moves the focus there.

navigate_1d takes a page parameter, which is a Page Object that describes the current state of the menu. The Page Object must have a property called focus. navigate_1d will search the menu until the current focus (according to the Page Object) matches target.

Typically this function is used from a Page Object’s navigate method; don’t use this directly in your test scripts. For example:

    class Menu(stbt.FrameObject):
        @property
        def is_visible(self):
            ...  # implementation not shown

        @property
        def focus(self):
            '''Read the currently focused menu item.'''
            return stbt.ocr(frame=self._frame, region=...)

        def navigate_to(self, target):
            return stbt.navigate_1d(target, page=self,
                                    direction=stbt.Direction.HORIZONTAL)
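The idea of searching until the focus matches the target can be illustrated with a simplified, self-contained simulation. This is not how stbt implements navigate_1d (it omits learning, recovery and press_and_wait). FakeMenu, navigate_1d_sketch and NavigationFailedSketch are hypothetical names, and the in-memory menu stands in for both the Page Object and the device.

```python
import time


class NavigationFailedSketch(AssertionError):
    """Hypothetical stand-in for stbt.NavigationFailed."""


class FakeMenu:
    """Hypothetical in-memory horizontal menu (illustration only)."""

    def __init__(self, items, index=0):
        self.items = items
        self.index = index

    @property
    def focus(self):
        return self.items[self.index]

    def press(self, key):
        """Return the menu state after a key press; focus stops at the edges."""
        step = {"KEY_RIGHT": 1, "KEY_LEFT": -1}[key]
        new_index = min(max(self.index + step, 0), len(self.items) - 1)
        return FakeMenu(self.items, new_index)


def navigate_1d_sketch(target, page, eq=lambda a, b: a == b, timeout_secs=120):
    """Move right until the edge, then left, until page.focus matches target."""
    deadline = time.monotonic() + timeout_secs
    for key in ("KEY_RIGHT", "KEY_LEFT"):
        while time.monotonic() < deadline:
            if eq(page.focus, target):
                return page  # a new page object reflecting the final state
            new_page = page.press(key)
            if new_page.focus == page.focus:
                break  # focus did not move: we hit the edge, so turn around
            page = new_page
    raise NavigationFailedSketch("Couldn't find %r" % (target,))
```

As in the real function, the sketch only moves the focus and returns the new page state; it never presses KEY_OK.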

- Parameters:

target – Navigate to this menu item. Typically a string.

page – An instance of a stbt.FrameObject sub-class that represents the current state of the menu. We will use this object’s focus property to determine which item is currently focused. After we have pressed up, down, left, or right, we will call this object’s refresh method to read the new state from the screen.

direction – Direction to move in: Either Direction.VERTICAL (for navigation with “KEY_DOWN” and “KEY_UP”) or Direction.HORIZONTAL (for navigation with “KEY_RIGHT” and “KEY_LEFT”).

mask – A mask to use when calling stbt.press_and_wait to determine when the current focus has finished moving. Usually this will be a Region specifying where the menu is on the screen, but it can also be a more complex mask.

timeout_secs – Raise NavigationFailed if we still haven’t found the target after this many seconds.

get_focus – By default, navigate_1d will use the page object’s focus property to determine which item is currently focused. You can override this behaviour by providing a function that takes a single argument (the page instance) and returns the focused menu item.

eq – A function that compares two strings (the current focus and the target), and returns True if they match. The default is stbt.ocr_eq, which ignores common OCR errors.

press_and_wait –

navigate_1d will call stbt.press_and_wait with the appropriate key (“KEY_DOWN”, “KEY_UP”, “KEY_LEFT”, or “KEY_RIGHT”) to move the focus and wait until it has finished moving. If you need to customise the parameters to press_and_wait, you can pass in your own function here: It should accept a key name and call the real stbt.press_and_wait with your extra parameters. For example:

    def my_press_and_wait(key):
        return stbt.press_and_wait(
            key, mask=stbt.Region(...), timeout_secs=10)

    ...

    return stbt.navigate_1d(
        target, page=self, direction=stbt.Direction.HORIZONTAL,
        press_and_wait=my_press_and_wait)

In many cases a lambda (anonymous function) is more convenient:

    return stbt.navigate_1d(
        target, page=self, direction=stbt.Direction.HORIZONTAL,
        press_and_wait=lambda key: stbt.press_and_wait(
            key, mask=stbt.Region(...), timeout_secs=10))

recover –

A function that will recover if navigation “falls off” the edge of the menu onto a different page.

“Falling off” means that page.is_visible is False after navigating up, down, left or right.

By default, navigate_1d will try to recover by pressing the opposite key (for example, “KEY_LEFT” if it had fallen off after pressing “KEY_RIGHT”), and if that fails, it will try “KEY_BACK”. To use a different recovery strategy, specify your own function here.

The function should take a single argument of type stbt.NavigationState. It should do the appropriate actions to get back onto the menu that we were navigating, and then return a new Page Object that represents the new state of the menu. For example:

    def my_recover(state: stbt.NavigationState):
        assert stbt.press_and_wait("KEY_BACK")
        return Menu()

    stbt.navigate_1d(..., recover=my_recover)

If necessary, you can check the function’s parameter to see the previous Page Object (before falling off) and the last key that was pressed.

Your recovery function doesn’t have to get back to the same menu item that was focused before falling off; it just needs to get back onto the menu. For example, if your Page Object has a staticmethod called open that will open the menu from any state, you can use that to recover, like this:

    stbt.navigate_1d(..., recover=lambda _: Menu.open())

The recover parameter can also be a string, which is the name of a key for stbt.press. navigate_1d will attempt to recover by pressing that key once.

learning –

We can remember the items we discovered during navigation so that the next time you call navigate_1d we can use that information to navigate faster. To disable this behaviour, pass learning=stbt.Learning.NONE. See stbt.Learning.

If the actual menu of the device-under-test doesn’t match the learned menu structure, this function will still work: it will fall back to the searching behaviour.

id – A unique identifier for this menu. You only need to specify this if learning is enabled and you are using the same FrameObject class to recognize many different menus and sub-menus.
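The default recovery order described under the recover parameter (opposite key first, then “KEY_BACK”) can be sketched as follows. This is a simplified illustration under stated assumptions, not the library's code: recover_sketch, OPPOSITE_KEY, and the press and is_menu_visible callables are all hypothetical stand-ins.

```python
from typing import Callable, Optional

# Opposite direction for each navigation key.
OPPOSITE_KEY = {
    "KEY_LEFT": "KEY_RIGHT",
    "KEY_RIGHT": "KEY_LEFT",
    "KEY_UP": "KEY_DOWN",
    "KEY_DOWN": "KEY_UP",
}


def recover_sketch(last_key: str,
                   press: Callable[[str], object],
                   is_menu_visible: Callable[[], bool]) -> Optional[str]:
    """Try the opposite key, then KEY_BACK; return the key that worked."""
    for key in (OPPOSITE_KEY[last_key], "KEY_BACK"):
        press(key)
        if is_menu_visible():
            return key  # we are back on the menu
    return None  # neither strategy got us back onto the menu
```

A custom recover function replaces this whole strategy, which is why it only needs to return a Page Object for the menu, in whatever state it ends up.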

- Returns:

A new FrameObject instance of the same type as page, reflecting the device-under-test’s new state after the navigation completed.

- Raises:

NavigationFailed – If we can’t find the target.

Added in v34.
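As an illustration of the eq parameter described above, a custom comparison function only needs to accept two strings and return a bool. The sketch below is a hypothetical example (normalized_eq is not part of stbt) that ignores case and whitespace differences. It does not reproduce the OCR-error tolerance of the default stbt.ocr_eq.

```python
import re


def normalized_eq(focus: str, target: str) -> bool:
    """Compare two menu labels, ignoring case and runs of whitespace."""
    def normalize(text: str) -> str:
        # Collapse whitespace runs to one space, trim, and fold case.
        return re.sub(r"\s+", " ", text).strip().casefold()

    return normalize(focus) == normalize(target)
```

Such a function would be passed as eq=normalized_eq when calling navigate_1d or navigate_grid.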

- stbt.navigate_grid(

- target: str,

- page: FrameObject,

- *,

- mask: Mask | Region | str = Region.ALL,

- timeout_secs: float = 300,

- get_focus: Callable[[FrameObject], str] | None = None,

- eq: Callable[[str, str], bool] | None = None,

- press_and_wait: Callable[[str], Transition | TransitionStatus] | None = None,

- recover: str | Callable[[NavigationState], FrameObject] | None = None,

- retry_missed_keypresses: bool = False,

- learning: Learning = Learning.PERSISTENT,

- id: str | None = None,

Navigate through a 2-dimensional grid, searching for a target.

This doesn’t press KEY_OK on the target; it only moves the focus there.

See navigate_1d for documentation on all the parameters.

Added in v34.

- exception stbt.NavigationFailed

Bases: AssertionError

Raised by navigate_1d or navigate_grid if it couldn’t find the requested target.

- class stbt.NavigationState

State of the device-under-test during navigation.

Passed to the recover parameter of navigate_1d and navigate_grid.

Latest frame of video from the device-under-test (that is, its current state).

The last Page Object seen when the page was still visible (before navigation “fell off” the edge of the menu onto a different page).

The last key pressed during navigation, that caused it to “fall off” the edge.

- stbt.ocr(

- frame: Frame | None = None,

- region: Region = Region.ALL,

- mode: OcrMode = OcrMode.PAGE_SEGMENTATION_WITHOUT_OSD,

- lang: str | None = None,

- tesseract_config: dict[str, bool | str | int] | None = None,

- tesseract_user_words: list[str] | str | None = None,

- tesseract_user_patterns: list[str] | str | None = None,

- upsample: bool | None = None,

- text_color: Color | None = None,

- text_color_threshold: float | None = None,

- engine: OcrEngine | None = None,

- char_whitelist: str | None = None,

- corrections: dict[Pattern | str, str] | None = None,

Return the text present in the video frame as a Unicode string.

Perform OCR (Optical Character Recognition) using the “Tesseract” open-source OCR engine.

- Parameters:

frame (Frame) – If this is specified it is used as the video frame to process; otherwise a new frame is grabbed from the device-under-test.

region (Region) – Only search within the specified region of the video frame.

mode (OcrMode) – Tesseract’s layout analysis mode.

lang (str) –

The three-letter ISO-639-3 language code of the language you are attempting to read; for example “eng” for English or “deu” for German. More than one language can be specified by joining with ‘+’: for example “eng+deu” means that the text to be read may be in a mixture of English and German.

This defaults to “eng” (English). You can change the global default value by setting lang in the [ocr] section of .stbt.conf or the appropriate Node-specific configuration file.

You may need to install the tesseract language pack; see installation instructions here.

tesseract_config (dict) – Allows passing configuration down to the underlying OCR engine. See the tesseract documentation for details.

tesseract_user_words (unicode string, or list of unicode strings) – List of words to be added to the tesseract dictionary. To replace the tesseract system dictionary altogether, also set tesseract_config={'load_system_dawg': False, 'load_freq_dawg': False}.

tesseract_user_patterns (unicode string, or list of unicode strings) –

List of patterns to add to the tesseract dictionary. The tesseract pattern language corresponds roughly to the following regular expressions:

    tesseract  regex
    =========  ===========
    \c         [a-zA-Z]
    \d         [0-9]
    \n         [a-zA-Z0-9]
    \p         [:punct:]
    \a         [a-z]
    \A         [A-Z]
    \*         *
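To check what a given pattern would roughly accept, the table above can be turned into a small converter. This is a sketch that assumes the table covers the whole pattern language; tesseract's real matching may differ. tesseract_pattern_to_regex is a hypothetical helper, not part of stbt or tesseract.

```python
import re
import string

# Character classes from the table above, as Python regex fragments.
_CLASSES = {
    "c": "[a-zA-Z]",
    "d": "[0-9]",
    "n": "[a-zA-Z0-9]",
    "p": "[" + re.escape(string.punctuation) + "]",  # stands in for [:punct:]
    "a": "[a-z]",
    "A": "[A-Z]",
}


def tesseract_pattern_to_regex(pattern: str) -> str:
    """Translate a tesseract user pattern into an approximate Python regex."""
    out = []
    i = 0
    while i < len(pattern):
        char = pattern[i]
        if char == "\\" and i + 1 < len(pattern):
            code = pattern[i + 1]
            if code in _CLASSES:
                out.append(_CLASSES[code])
            elif code == "*":
                out.append("*")  # repeat the previous item zero or more times
            else:
                out.append(re.escape(code))  # unknown escape: treat as literal
            i += 2
        else:
            out.append(re.escape(char))  # literal character
            i += 1
    return "".join(out)
```

For example, the pattern \d\d:\d\d translates to a regex that matches a time such as 12:34, which can be tried out with re.fullmatch before passing the pattern to stbt.ocr.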

upsample (bool) – Upsample the image 3x before passing it to tesseract. This helps to preserve information in the text’s anti-aliasing that would otherwise be lost when tesseract binarises the image. This defaults to True; you can override the global default value by setting upsample=False in the [ocr] section of .stbt.conf. You should set this to False if the text is already quite large, or if you are doing your own binarisation (pre-processing the image to make it black and white).

text_color (Color) – Color of the text. Specifying this can improve OCR results when tesseract’s default thresholding algorithm doesn’t detect the text, for example white text on a light-colored background or text on a translucent overlay with dynamic content underneath.

text_color_threshold (int) – The threshold to use with text_color, between 0 and 255. Defaults to 25. You can override the global default value by setting text_color_threshold in the [ocr] section of .stbt.conf.

engine (OcrEngine) – The OCR engine to use. Defaults to